INVESTIGATING STATISTICAL CONCEPTS, APPLICATIONS, AND METHODS

NOTES FOR INSTRUCTORS


General Notes:

We envision the materials as being very flexible in how they are used. You may choose to lead students through many of the investigations together as a class, but we also encourage you to give students time to work through some questions on their own (or, better, in pairs) and then debrief with them afterwards. If you do have students work through investigations largely on their own, it’s very important to conduct a wrap-up discussion at the end of class, and/or at the beginning of the next class, in which you make clear what the “morals” of the investigations were. In other words, summarize for students what they were supposed to have learned through the investigations and what they are responsible for knowing, making sure they are reading the additional exposition in the text as well. These wrap-up discussion times are also ideal for inviting students’ questions, because students will have wrestled with the ideas enough to know what the issues are and where their understanding is shaky. You may wish to collect students’ answers to just a few of the questions in an investigation to read over and give feedback before the next class session.

The practice problems are intended to provide students with basic review and practice of the most recent ideas between class periods. This will help structure their time outside of class and provide a way for you and the students to informally assess their understanding and provide feedback. You may choose to collect and grade these as homework problems or use them more to motivate initial discussion in the next class period. If your evaluation of this work will be more informal, you can also consider including a “participation” component in your syllabus to reward effort. Solutions have been posted online. They are password-protected, giving the instructor the option of giving students direct access or not. These problems also work well in a course management system such as Blackboard or WebCT for more automatic submission and feedback.

You may also wish to supplement some of the material in the book, e.g., by bringing in recent news articles for discussion or assigning data collection projects. We think students will find the ISCAM investigations interesting and motivating, but there will also be time to share other examples. Some students prefer to read through examples worked out in detail, and we have provided at least one such example at the end of each chapter. If you do bring in your own material, we caution you to try to remain consistent with the text in terminology, notation, and sequencing of ideas. Some of this material and sequencing may be new to you as the instructor and may take a while to get used to. Keep in mind that material you may be used to introducing at a different point in the course will be coming eventually.

We have written the materials assuming students will have easy access to a computer, and we make increasing use of technology as the course progresses. We have taught the course with daily access to a computer lab, but believe it will also work with less frequent visits to a computer lab and/or more instructor demonstrations (using a computer projection system). If the students do not have frequent access to computers during class, you may wish to assign more of the computer work to take place outside of class.

We do provide detailed instructions for all of the computer work (Minitab, Excel, Java applets), but you may still want to encourage students to work together. We have also assumed use of Minitab 14, but at the Minitab Hints page we will try to outline where you will have to make adjustments to use Minitab 13, as well as suggestions for making additional use of Minitab 14’s new capabilities (e.g., automatic graph updating).

Even with heavy use of computers, it is also nice to have some days where you focus less on the computers, to give students a chance to ask questions on the larger concepts in the material and even work a few calculations “by hand.” Students will use a calculator on a daily basis as well.

The following elements will be found in each chapter:

Investigations: These are intended to be “covered” during the lecture period, either as note pages for the class to complete together or as worksheets for students to work on individually or in pairs. Often, a section can correspond to one 50-60 minute class period.

Practice Problems: These are intended as quick reviews of the basic ideas and terminology of the previous investigation(s) and can often be assigned between class periods. They can be located by the small blue page bar.

Explorations: These are somewhat more open-ended explorations of additional statistical content (e.g., uncovering mathematical properties of the odds ratio vs. relative risk, deeper consideration of sampling plans, properties of confidence interval methods based on different degrees of freedom). The explorations typically involve heavy software usage and work well as “lab assignments” that students can complete inside or outside of class.

Examples: Each chapter contains at least one example worked out for students to see the solution approach in detail. The pages on which they occur have a long blue edge bar.

Chapter Summary and Technology Summary: These provide a review list of the main concepts covered in the chapter as well as the basic computer commands that will be used in subsequent chapters.

Exercises: At the end of each chapter is a large set of exercises covering material from throughout the chapter. The exercises include a combination of conceptual questions, application exercises, and mathematical explorations.

References: A list of the study references for all investigations, practice problems, and exercises appears at the end of each chapter.

In aiming to make the materials easy to navigate, we have adopted the following numbering scheme:

- Section x.y refers to the yth section in Chapter x.
- Investigation x.y.z refers to the zth investigation of the yth section in Chapter x.
- Practice Problem x.y.z refers to the zth problem in Chapter x, Section y.
- Exploration a.b refers to an exploration in Chapter a, Section b. If multiple explorations occur in a section, they are given a third number as well.
- Example c.d is the dth example of the cth chapter and is located at the end of the chapter (before the exercises).

Prologue: Traffic Deaths in Connecticut

Timing: Taking roll, explaining the syllabus, telling students a bit about what the course would be like, and then going through the Prologue together (giving them a chance to think about the issues first) took about 50 minutes. You might consider asking them to read some of the background information and even answer the first few questions in Investigation 1.1.1 before arriving at the next class period.

The goal of the Prologue is to introduce students to some key concepts and ways of thinking statistically that will hopefully recur throughout the course. It’s also important to get them used to thinking about the ideas on their own first, before you discuss them in class.

Students can usually provide very good answers to these questions, and you should summarize by reminding them how important it is to make “fair” comparisons.

Section 1.1: Summarizing Categorical Data

Timing: Answering additional questions (e.g., explaining the different elements of the text) and having them work through Investigation 1.1.1 took about 50 minutes. Students are asked to make a graph in Excel, but that can easily be moved outside of class (perhaps after an instructor demonstration).

Some additional information about the study in Investigation 1.1.1:

- You might consider showing students a copy of the journal article to demonstrate its authenticity. You can also find some links on the web to the ensuing court case.

- In May 2000, eight persons who had worked at the same microwave-popcorn production plant were reported to have bronchiolitis obliterans. No recognized cause was identified in the plant. Therefore, the researchers medically evaluated current employees and assessed their occupational exposure in November 2000.

- They used a combination of questionnaires and spirometric testing. They also compared the information to the National Health and Nutrition Examination Survey.

- The results here focus on the spirometric testing: 31 people had abnormal results, 10 with low FVC (forced vital capacity) values, 11 with airway obstruction, and 10 with both airway obstruction and low FVC.

- Diacetyl is the predominant ketone in artificial butter flavoring and was used as a marker of organic-chemical exposure.

- They tested air samples and dust samples from various areas in the plant. These areas included:
  - Plain-popcorn packaging line, bag-printing areas, warehouse, offices, outside
  - Quality control or maintenance
  - Microwave-popcorn packaging lines
  - Mixing room

  The first group is considered “non-production” and so has lower exposure, but the researchers also looked at how long employees had worked in different areas to classify them as “high exposure” or “low exposure.”

In (e), get students to tell you their descriptions of the graph, and solicit descriptions from several people. Make sure the descriptions are in context and include the comparison. You will probably be able to tell them that all the responses were good and that one distinction between statistics and other subjects is that there can be multiple correct answers. When students offer suggestions about reasons for the difference in the groups, make sure they discuss a factor that differs between the two groups. So saying “other health issues” isn’t enough; a better answer is that those who worked in certain areas of the plant may have a different socioeconomic status (SES) than those who work in the production areas, or that they may be more likely to live in the country, which has different air quality, etc. Really get them to suggest the need for comparison, either to people outside the plant or to people in different areas of the plant.

Also build up the idea of not just comparing the counts but converting to proportions first.

In (i), you might also want to ask students to calculate the relative risk for (h) and see that it turns out different from that for (c), even though the difference in proportions is the same.
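To make this point concrete at the board, the short Python sketch below compares two pairs of success proportions that share the same difference but give quite different relative risks. The proportions are hypothetical, chosen only for illustration; they are not the values from Investigation 1.1.1.

```python
# Hypothetical success proportions (not the study's data): two comparisons
# with the same difference in proportions but different relative risks.


def difference_in_proportions(p1: float, p2: float) -> float:
    """Difference p1 - p2 between two success proportions."""
    return p1 - p2


def relative_risk(p1: float, p2: float) -> float:
    """Ratio p1 / p2 of two success proportions (p2 must be positive)."""
    return p1 / p2


comparisons = {
    "comparison 1": (0.50, 0.30),
    "comparison 2": (0.25, 0.05),
}

for label, (p1, p2) in comparisons.items():
    diff = difference_in_proportions(p1, p2)
    rr = relative_risk(p1, p2)
    print(f"{label}: difference = {diff:.2f}, relative risk = {rr:.2f}")
```

Both differences print as 0.20, while the relative risks print as 1.67 and 5.00: the same absolute gap is a much larger relative change when the baseline proportion is small.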

In (k), we encourage you to go through the odds ratio calculations. You might consider asking a student in the class to define odds, but you need to build up the odds ratio slowly and always encourage students to interpret the calculation correctly, because precisely interpreting the odds ratio is tricky for most students and requires much practice.
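One way to build up the odds ratio slowly is to move from counts to odds to the ratio of odds, one step at a time. The sketch below does this with hypothetical counts (21 workers per exposure group), used only to show the arithmetic; substitute the actual table from Investigation 1.1.1 in class.

```python
from fractions import Fraction

# Hypothetical 2x2 table (not the study's data):
# (number with airway obstruction, group size) for each exposure group.
table = {
    "high exposure": (15, 21),
    "low exposure": (6, 21),
}


def odds(successes: int, total: int) -> Fraction:
    """Odds of success = successes / failures (failures must be nonzero)."""
    return Fraction(successes, total - successes)


odds_high = odds(*table["high exposure"])  # 15 with / 6 without
odds_low = odds(*table["low exposure"])    # 6 with / 15 without
odds_ratio = odds_high / odds_low

print(f"odds (high) = {float(odds_high):.2f}, odds (low) = {float(odds_low):.2f}")
print(f"odds ratio = {float(odds_ratio):.2f}")
```

With these made-up counts the odds are 2.50 and 0.40, giving an odds ratio of 6.25. A careful interpretation reads: “the odds of airway obstruction are 6.25 times as large for high-exposure workers as for low-exposure workers,” not “high-exposure workers are 6.25 times as likely.”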

Page 7: It’s important that students get a chance to practice with the vocabulary soon, as it is not as easily mastered as they may initially think. You especially need to watch that they state variables as variables. Too often they will want to say “lung cancer” instead of describing the entire variable (“whether or not the person has lung cancer”). Or they will slip into summaries like “the number with lung cancer.” Or they will combine the variables and the observational units: “those with lung cancer.” We strongly recommend trying to get them to think of the variable as the question asked of each observational unit.

The practice problems are intended to get students to work more with variables. Much of the terminology will be unfamiliar to them, or they will have other “day-to-day” definitions, so it is important to “immerse” them in the vocabulary and allow them to practice it often. We suggest beginning the next class by discussing these problems, especially 1.1f and 1.1.2. We highly encourage you to collect students’ work on the practice problems (reading through and commenting on their responses) and/or to briefly discuss them at the beginning of the next class period. We envision these as a more informal, thought-provoking, self-check form of assessment.

We have included “section summaries” at the end of the sections. This is a good place to ask students if they have questions on the material so far. You might also consider adapting these into bullet points to recap the previous class period at the start of the next class period. You may want to remind students occasionally that they should read all of the study conclusion, discussion, and summary sections carefully; some students get in the habit of working through the investigations by answering questions but do not “slow down” enough to read through and understand the accompanying exposition.

Section 1.2: Analyzing Categorical Data

Timing: Students were able to complete Investigations 1.2.1 and 1.2.2 in approximately 60 minutes. We did Investigation 1.2.1 mostly together, but then students worked on Investigation 1.2.2 in pairs. You may wish to have students complete some of the Excel work outside of class (including for Investigation 1.2.1, prior to coming to class). Exploration 1.2 makes heavy use of Excel but no other technology.

Investigation 1.2.1 gives students immediate practice in applying the terminology of Section 1.1. We strongly encourage you to allow the students to work through these questions, at least through (j), on their own first. Question (c) asks students to use Excel, but again they could do that outside of class or you could demonstrate it for them. Students will struggle, and you need to visit them often to make sure they are getting the answers correct (e.g., how they state the variables, whether they see “amount of smoking” as categorical, and calculation of the odds ratios). The odds-ratio questions are phrased to encourage them to treat having lung cancer as a success and to put the nonsmokers’ odds in the denominator. With these data, this makes the odds ratio greater than one and treats the nonsmokers as the reference group. The main criticism we expect to hear in (j) is age, but even after the odds ratios were “adjusted” for age, there could be other differences between the groups (e.g., socioeconomic status, diet, exercise) that are related to both smoking status and occurrence of lung cancer.

Question (l) is a subtle point but important for students to think about. The point here is that this proportion is not a reasonable estimate because the researchers fixed the numbers of lung cancer and control patients by design (i.e., a case-control study). In this text, we tend to distinguish between the type of study (case-control, cohort, cross-classification) and the type of design (retrospective, prospective). These are not clear-cut distinctions, and you may not wish to spend too long on them. It is much more important for students to distinguish between observational studies and experiments, and always to consider the implications of the study design for the final interpretation of the results. Questions (m) and (n), about when we can draw cause/effect conclusions and when we can generalize results to a larger population, are important ones that will arise throughout the course, so it’s worth drawing students’ attention to them. In particular, we want students to get into the habit of seeing these as two distinct questions, answered based on different criteria, that should always be asked when summarizing the conclusions of a study.
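A small numerical illustration of question (l) may help. In the sketch below, the number of lung cancer cases is held fixed while the researchers (hypothetically) decide to recruit either one or two controls per case. All counts are made up for illustration. The sample “proportion with lung cancer” simply tracks this design choice, while the odds ratio comparing smokers to nonsmokers does not change, because recruiting k times as many controls divides both groups’ odds by k.

```python
from fractions import Fraction


def summarize(cases: dict, controls: dict) -> tuple:
    """Return (sample proportion with lung cancer, odds ratio smokers vs nonsmokers).

    `cases` and `controls` map smoking status -> count; the numbers of cases
    and controls are fixed by the (hypothetical) case-control design.
    """
    n_cases = sum(cases.values())
    n_total = n_cases + sum(controls.values())
    prop_cancer = Fraction(n_cases, n_total)
    # Odds of lung cancer within each smoking group = cases / controls in that group.
    odds_smokers = Fraction(cases["smoker"], controls["smoker"])
    odds_nonsmokers = Fraction(cases["nonsmoker"], controls["nonsmoker"])
    return prop_cancer, odds_smokers / odds_nonsmokers


cases = {"smoker": 90, "nonsmoker": 10}
controls_design_1 = {"smoker": 60, "nonsmoker": 40}   # one control per case
controls_design_2 = {"smoker": 120, "nonsmoker": 80}  # two controls per case

for name, controls in [("1 control per case", controls_design_1),
                       ("2 controls per case", controls_design_2)]:
    prop, odds_ratio = summarize(cases, controls)
    print(f"{name}: proportion with lung cancer = {float(prop):.3f}, "
          f"odds ratio = {float(odds_ratio):.2f}")
```

The proportion with lung cancer drops from 0.500 to 0.333 purely because of the recruitment decision, while the odds ratio stays at 6.00 in both designs.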

Investigation 1.2.2 provides students with more practice and gets them to again think in terms of association. They should be able to tell you some advantages of the prospective design over the retrospective design (e.g., not relying on memory, seeing what develops instead of taking people who are already sick). However, this design does not take into account any of the other possible confounding variables or the fact that the researchers initially selected only healthy, white men. You may want to pre-create the Excel worksheet for them and then have them open it and start from there.

The Excel Exploration can be done inside or outside of class. I had students finish it in pairs outside of class and then turn in a report of their results. They should see that the odds ratio and the relative risk are similar when the baseline risk is small, and that they can be very different from 1 even for the same difference in proportions. This is also the first time they really see that the OR and RR are equal to one when the difference in proportions is zero. We encourage you to have them view the updating segmented bar graph throughout these calculations to also see the changes visually. This exploration is essentially playing with formulas, but it allows them to come to a deeper understanding of the formulas, how to express them, and hopefully how they are related. Some issues you might want to ask them about afterwards (in class or in a written report) include:

- when will RR and OR be similar in value (you can even lead a discussion of the mathematical relationship $\text{OR} = \text{RR} \times \frac{1-p_2}{1-p_1}$, where $p_1$ and $p_2$ are the success proportions in the numerator and denominator groups, respectively)
- when are RR and OR more useful values to look at than the difference in proportions (primarily when the success proportions are very small or very large)
- when will RR and OR be equal to 1, and what does that imply about the relationship between the variables/the difference in proportions
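If you lead the discussion of the relationship between OR and RR, a quick numerical check can complement the algebra. The sketch below uses hypothetical proportions, with $p_1$ the success proportion in the numerator group and $p_2$ in the denominator group. It confirms the identity OR = RR(1 − p2)/(1 − p1) and shows OR approaching RR as the proportions shrink.

```python
# Check OR = RR * (1 - p2) / (1 - p1) on hypothetical proportions and watch
# OR approach RR as the success proportions become small.


def relative_risk(p1: float, p2: float) -> float:
    return p1 / p2


def odds_ratio(p1: float, p2: float) -> float:
    return (p1 / (1 - p1)) / (p2 / (1 - p2))


pairs = [(0.60, 0.30), (0.20, 0.10), (0.02, 0.01), (0.30, 0.30)]

for p1, p2 in pairs:
    rr = relative_risk(p1, p2)
    or_value = odds_ratio(p1, p2)
    via_identity = rr * (1 - p2) / (1 - p1)
    print(f"p1={p1:.2f}, p2={p2:.2f}: RR={rr:.3f}, OR={or_value:.3f}, "
          f"RR*(1-p2)/(1-p1)={via_identity:.3f}")
```

The first three pairs all have RR = 2, yet OR moves from 3.5 to 2.25 to about 2.02 as the proportions get smaller; the last pair shows both ratios equal to 1 when the difference in proportions is zero.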

These comparisons should fall out if they follow the structure of the examples and note what changed with each table. You also need to decide how much of the Excel output you want them to turn in.

As you summarize these first three investigations, you might even want to warn students that they won’t see RR and OR much for a while, but the other big lessons they should be taking from this early material are the importance of the study design and of always using graphical and numerical summaries as they explore the data. Students should also be getting the idea that statistics matters and is important for investigating important issues.

Additionally, you can highlight the three studies they have seen so far (Popcorn, Wynder and Graham, Hammond and Horn) and compare and contrast them. For example:

| Popcorn and lung disease | Wynder and Graham | Hammond and Horn |
| --- | --- | --- |
| Defined subjects as high/low exposure, classified airway obstruction | Found subjects with and without lung cancer, classified smoking status (case-control, retrospective) | Followed subjects, found level of smoking and whether they died of lung cancer (cross-classified, prospective) |
| Meaningful to examine proportion with airway obstruction | Not meaningful to examine proportion with lung cancer | Meaningful to examine proportion who died of lung cancer and proportion of smokers |
| Similar number in each explanatory variable group | Similar number in each response variable group | |
| May not be representative | Controlled interviewer behavior | Not much control (22,000 ACS volunteers) |

You may wish to summarize how the “invariance” of odds ratios helps to explain why they are preferred in many situations over the easier-to-interpret relative risk. For example, with the odds ratio, it does not matter which category is considered the success.
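This invariance can be checked numerically. In the sketch below, which uses hypothetical proportions, calling the other category the “success” replaces each proportion p with 1 − p. The odds ratio becomes exactly its reciprocal, so the same strength of association is reported from the other direction. The relative risk instead changes to a value that is not simply its reciprocal.

```python
# Hypothetical success proportions in two groups.
p1, p2 = 0.40, 0.20


def odds_ratio(a: float, b: float) -> float:
    return (a / (1 - a)) / (b / (1 - b))


def relative_risk(a: float, b: float) -> float:
    return a / b


# Switching which category counts as "success" replaces each p with 1 - p.
or_success, or_failure = odds_ratio(p1, p2), odds_ratio(1 - p1, 1 - p2)
rr_success, rr_failure = relative_risk(p1, p2), relative_risk(1 - p1, 1 - p2)

print(f"OR: {or_success:.3f} vs {or_failure:.3f} (reciprocal: {1 / or_success:.3f})")
print(f"RR: {rr_success:.3f} vs {rr_failure:.3f} (reciprocal: {1 / rr_success:.3f})")
```

Here the odds ratio goes from about 2.667 to 0.375 (its reciprocal), while the relative risk goes from 2.000 to 0.750 rather than to 0.500.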

Section 1.3: Confounding

Timing: This section will probably take approximately 45-50 minutes. You may choose to do more leading in this section in the interest of time. No technology is used.

The initial steps of Investigation 1.3.1 should start

feeling fairly routine for students by this point. You might consider asking them to complete up

to a certain question before they come to class. It is also fun to ask them whether or not

they wear glasses and if they remember the type of lighting they had as a

child. The key point is of course the

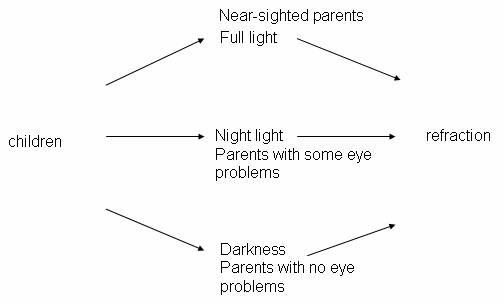

distinction between association and causation and through class discussion

students should be able to suggest some reasonable confounding variables. Where to be picky is to make sure that their

confounding variable has a clear connection to the response (eye condition) and

that there is the differentiation in this variable between the explanatory

variable groups (type of lighting).

Students tend to describe the connection to the response but not to the

explanatory variable. It can be helpful

to ask students to think of confounding as an alternative explanation, as

opposed to a cause/effect explanation, for the association between the

variables. You might consider having

them practice drawing an experimental design schematic (formally introduced

later in the course), along with matching the different confounding variable

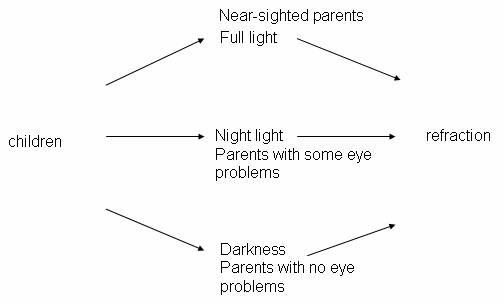

outcomes with the different explanatory variable outcomes. For example:

The practice problems at the end of this investigation are a little more subtle than earlier ones, and it will be important to discuss them in class and ensure that students understand the two things they need to discuss to identify a variable as potentially confounding (its connection to both the explanatory and the response variable).

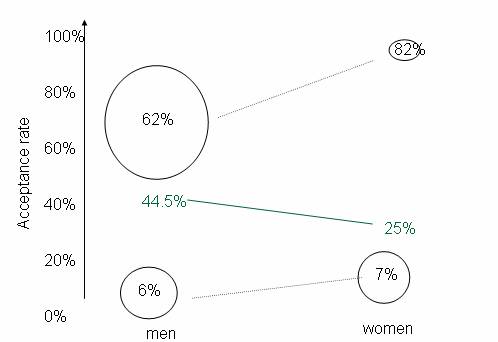

In Investigation 1.3.2, we have chosen to treat Simpson’s

Paradox as another confounding variable situation. This investigation goes into the mathematical

formula as another way to illustrate the source of the paradox (the imbalance in

where the women applied and an imbalance in the acceptance rates of the two

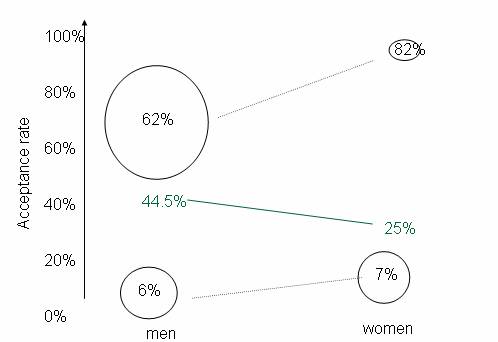

programs). You might also consider

showing them a visual illustration such as:

where the size of the circles are intended to convey the

sample sizes in each category and thus their overall “weight” in the overall

calculation.
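The “weight” idea can also be written out as a calculation: each group’s overall acceptance rate is a weighted average of its program acceptance rates, weighted by the fraction of that group’s applicants who applied to each program. The sketch below uses hypothetical admissions counts, not the data from Investigation 1.3.2. The counts were chosen so that women have the higher acceptance rate in each program but the lower rate overall, because most women applied to the program with the low acceptance rate.

```python
from fractions import Fraction

# Hypothetical admissions data (not the data from Investigation 1.3.2):
# program -> sex -> (number accepted, number applied)
data = {
    "Program 1": {"men": (480, 800), "women": (70, 100)},
    "Program 2": {"men": (20, 200), "women": (120, 800)},
}


def overall_rate_as_weighted_average(sex: str) -> Fraction:
    """Overall acceptance rate written as a weighted average of program rates.

    Each program's rate is weighted by the fraction of that sex's applicants
    who applied to the program.
    """
    total_applied = sum(data[prog][sex][1] for prog in data)
    rate = Fraction(0)
    for prog in data:
        accepted, applied = data[prog][sex]
        weight = Fraction(applied, total_applied)
        rate += weight * Fraction(accepted, applied)
    return rate


for sex in ("men", "women"):
    by_program = {prog: float(Fraction(*data[prog][sex])) for prog in data}
    overall = float(overall_rate_as_weighted_average(sex))
    print(f"{sex}: by program {by_program}, overall {overall:.3f}")
```

Men’s overall rate is 0.8(0.60) + 0.2(0.10) = 0.500, while women’s is (1/9)(0.70) + (8/9)(0.15) ≈ 0.211, even though women have the higher rate in both programs.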

Students will probably struggle a bit with (i) and (j) but hopefully can see the relationships if taken one step at a time.

Practice problem 1.3.3 will help them see the paradox arising in a different setting. Even when they see and pretty much understand what’s going on, students often struggle to provide a complete explanation of the cause of the apparent paradox. A very good follow-up question is to ask them to construct hypothetical data for which Simpson’s Paradox occurs, as in Practice problem 1.3.4.
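When students construct their own hypothetical data, a small checker like the one below (a sketch; the function names and example counts are hypothetical) can confirm whether their tables really exhibit the paradox. It checks that the comparison of A versus B points the same way in every stratum, while the combined comparison points the opposite way.

```python
from fractions import Fraction


def direction(p: Fraction, q: Fraction) -> int:
    """+1 if p > q, -1 if p < q, 0 if they are equal."""
    return (p > q) - (p < q)


def shows_simpsons_paradox(strata: list) -> bool:
    """Return True if A vs B points one way within every stratum
    but the opposite way once the strata are combined.

    Each stratum is a pair ((successes_A, total_A), (successes_B, total_B)).
    """
    within = {direction(Fraction(*a), Fraction(*b)) for a, b in strata}
    if len(within) != 1:
        return False  # strata disagree with each other, so nothing is reversed
    (stratum_direction,) = within
    overall_a = Fraction(sum(a[0] for a, _ in strata), sum(a[1] for a, _ in strata))
    overall_b = Fraction(sum(b[0] for _, b in strata), sum(b[1] for _, b in strata))
    return stratum_direction != 0 and direction(overall_a, overall_b) == -stratum_direction


# Hypothetical student-constructed data: A has the higher success rate in both
# strata, but most of A's cases fall in the harder stratum.
example_paradox = [((18, 20), (160, 200)), ((60, 200), (4, 20))]
example_no_paradox = [((18, 20), (16, 20)), ((6, 20), (4, 20))]

print(shows_simpsons_paradox(example_paradox))     # True
print(shows_simpsons_paradox(example_no_paradox))  # False
```

In the first example A wins within each stratum (0.90 vs 0.80 and 0.30 vs 0.20) yet loses overall (78/220 vs 164/220); in the second, the group sizes are balanced, so no reversal occurs.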

It will be important to convey to students exactly what “skills” and “concepts” you want them to take away from this investigation. If you want to focus on the “weighted average” idea (which has some nice recurrences later in the course), students will probably need a bit more practice. In summarizing these investigations with students, we hope they have motivated the need for more careful study designs that would avoid the confounding issues. Students often have an intuitive understanding of “random assignment,” but this will be developed more formally in the next section.

Section 1.4: Designing Experiments

Timing/Materials: This section will probably take approximately 45-50 minutes. Some of the simulations could be assigned outside of class. You will need index cards for the tactile simulation and access to an internet browser. Students have access to all of the data files and the Java applets page online and through the CD that comes with the text.

Much of Investigation 1.4.1 will probably be familiar to them, and we recommend going through it with students rather quickly.

In Investigation 1.4.1, students see yet another example of the limitations of an observational study and are usually very good at identifying potential confounding variables. It’s fun (and motivating) to ask students if they know whether their institution has a foreign language requirement and what might be the reasons for that requirement. Deciding whether foreign language study directly increases verbal ability (as posited by many deans) leads into the idea of an experiment, and most students appear to have heard these terms, including placebo, before.

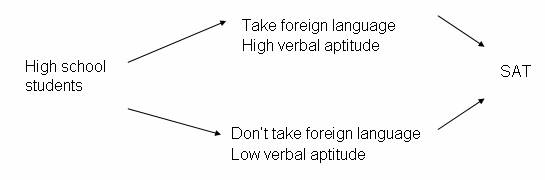

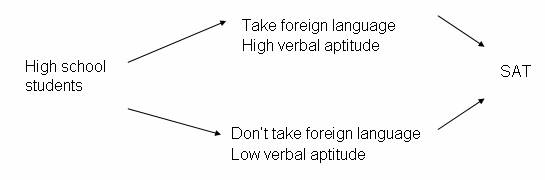

You may also wish to

discuss with them the schematic for the original observational study and the

potential “verbal aptitude” confounding variable:

In Investigation 1.4.2, we strive to help students see the benefits of randomization. We have students begin with a hands-on simulation of the randomization process. We feel this engages the students and gives them a concrete model of the process. We encourage you to have the students come to the board to collectively create the dotplot of their results in (d). Students could conduct the randomization outside of class and bring in their results, but we feel this concept is important enough that you may prefer to do so in class. Students then transition to an applet to perform the randomization process many, many more times. Hopefully the prior hands-on simulation and the graphics of the applet will help them connect to the computer simulation (and reinforce that they are mimicking the randomization process used by the researchers).

Nevertheless, be aware that some students click through the applet quickly without stopping to think about what it reveals. You might want to ask a question like “Where would an outcome show up in the dotplot if a repetition was unlucky and did not balance out the heights between the groups?” They should be able to work through the applet questions fairly quickly, and then you will want to emphasize that randomization “evens out” other lurking or extraneous variables between the groups, typically not even recorded or seen by the researchers, as illustrated by the “gene” and “x” variables in the applet. It is important to emphasize to students that while we often throw around the word “random” in everyday usage, achieving true “statistical randomness” takes some effort and should not be short-circuited.
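If you want students to see what the applet is doing “under the hood,” the sketch below mimics the same idea in Python. It is not the applet’s implementation, and the 20 heights are hypothetical. It repeatedly assigns subjects at random to two groups of 10 and records the difference in mean height. The differences center near zero, and the “unlucky” repetitions from the question above show up in the tails.

```python
import random
import statistics

# Hypothetical heights (inches) for 20 subjects; not the applet's data.
heights = [60, 61, 62, 63, 63, 64, 64, 65, 65, 66,
           66, 67, 67, 68, 68, 69, 70, 71, 72, 74]


def one_randomization(rng: random.Random) -> float:
    """Randomly split subjects into two groups of 10; return the difference in mean height."""
    shuffled = heights[:]
    rng.shuffle(shuffled)
    group_1, group_2 = shuffled[:10], shuffled[10:]
    return statistics.mean(group_1) - statistics.mean(group_2)


rng = random.Random(2024)  # fixed seed so the run is reproducible
differences = [one_randomization(rng) for _ in range(1000)]

print(f"mean difference: {statistics.mean(differences):.2f}")
print("share of repetitions off by more than 3 inches: "
      f"{sum(abs(d) > 3 for d in differences) / len(differences):.3f}")
```

The first number should come out close to 0 (randomization balances heights on average), while the second is the small fraction of repetitions that were unlucky.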

Practice problem 1.4.1 tests their understanding of what constitutes an experiment; we prefer to focus on the imposition of the explanatory variable (which was not done here). Practice problem 1.4.2 asks them to compare other types of randomization schemes, and Practice problem 1.4.3 highlights that experimental studies are not always feasible. These questions are especially good for generating class discussion (rather than for having strictly correct or incorrect solutions).

Investigation 1.4.3 is listed as optional or may be presented briefly. It continues to use the applet to have students think about the concept of “blocking.” We have chosen to discuss a rather informal use of “blocking” in that students first manually split subjects into homogeneous groups. The applet conveys the idea that if you actively balance out factors such as gender between the two groups, that will ensure further balance between the groups on some other variables as well (those related to gender, like height). We chose not to emphasize this concept strongly but did want students to think about the advantages (and disadvantages) of carrying out the experimental design on a more homogeneous group of subjects.
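A minimal sketch of the blocking idea, assuming hypothetical heights in which men are taller on average. It compares complete randomization with randomization carried out within gender blocks (5 men and 5 women per group). Because balancing gender also balances the gender-related variable height, the blocked design should produce a noticeably smaller spread in the difference in mean heights.

```python
import random
import statistics

# Hypothetical heights (inches); men are taller on average than women here.
men = [68, 69, 70, 71, 72, 68, 69, 70, 71, 72]
women = [62, 63, 64, 65, 66, 62, 63, 64, 65, 66]


def mean_difference(group_1, group_2):
    return statistics.mean(group_1) - statistics.mean(group_2)


def completely_randomized(rng):
    """Shuffle all 20 subjects together and split them 10/10."""
    everyone = men + women
    rng.shuffle(everyone)
    return mean_difference(everyone[:10], everyone[10:])


def blocked_by_gender(rng):
    """Randomize within each gender block: 5 men and 5 women go to each group."""
    m, w = men[:], women[:]
    rng.shuffle(m)
    rng.shuffle(w)
    return mean_difference(m[:5] + w[:5], m[5:] + w[5:])


rng = random.Random(7)  # fixed seed for reproducibility
unblocked = [completely_randomized(rng) for _ in range(2000)]
blocked = [blocked_by_gender(rng) for _ in range(2000)]

print(f"SD of height difference, completely randomized: {statistics.stdev(unblocked):.2f}")
print(f"SD of height difference, blocked by gender:     {statistics.stdev(blocked):.2f}")
```

The trade-off students might raise is also visible here: blocking only helps with variables related to the blocking factor, and it requires knowing that factor for every subject in advance.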

At this point in the course, you might also consider assigning a data collection project where students work with categorical variables and consider both observational and experimental studies. An example set of project assignments is posted online.

Section 1.5: Assessing Statistical Significance

Timing/Materials: With some review of the idea of confounding variables at the beginning, this section takes approximately 60 minutes. One timing consideration is that we have students do a second tactile simulation. This simulation is very similar to that in Section 1.4 but here focuses on the response instead of the explanatory variable. Still, you may want to make sure these simulations occur on different days. We do see value in having students do both, as they too easily forget what the randomization in the process is all about (and how we can make decisions in the presence of randomness). This simulation also ties closely to an applet and helps transition students to the concept of a p-value. You will need pre-sorted playing cards or index cards and access to an internet browser. We pre-sort the playing cards into packets of 24, with 11 black ones (clubs/spades) representing successes and 13 red ones (hearts/diamonds) representing failures, but you could also use index cards and have students mark the successes and failures themselves.

The goal in this section is to see the entire statistical process, from consideration of study design, to numerical and graphical summaries, to statistical inference. Students learn about the idea of a p-value by simulating a randomization test. One way to introduce this section is to say that even after we’ve used randomization to eliminate confounding variables as potential explanations for an association between the explanatory and response variables, another explanation still exists: maybe the observed association is simply the result of random variation. Fortunately, we can study the randomization process to determine how likely this is. While in the previous section we focused on how randomization evens out the effects of other extraneous variables, here the focus is on how large the difference in the response variable might be due to chance alone, if there were really no difference between the two explanatory groups. You will want to greatly emphasize the question of how to determine whether a difference is “statistically significant.” Try to get students to think about “what would happen by chance,” even before the simulation, as a way to answer this question (around question (f)). At some point (beginning of class, around question (f), end of class) you may even want to detour to another example to illustrate the logic of statistical significance. One demonstration that we have found effective is to have a student volunteer roll a pair of dice that look normal but are actually rigged to roll only sums of seven and eleven (e.g., unclesgames.com, other sources). Students realize after a few rolls that the outcomes are too surprising to have happened by chance alone with normal dice and thus provide compelling evidence that there is something fishy about the dice. It is important for students to think of the randomization distribution as a “what if” exploration to help them analyze the experimental results actually obtained.
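The arithmetic behind the rigged-dice demonstration is a nice preview of a p-value. The sketch below assumes two fair six-sided dice. It enumerates the 36 equally likely outcomes, finds the chance that one roll sums to 7 or 11, and then computes the chance that several independent rolls in a row all do.

```python
from fractions import Fraction
from itertools import product

# Enumerate the 36 equally likely outcomes for two fair six-sided dice.
outcomes = list(product(range(1, 7), repeat=2))
favorable = sum(1 for a, b in outcomes if a + b in (7, 11))
p_seven_or_eleven = Fraction(favorable, len(outcomes))

print(f"P(sum is 7 or 11 on one roll) = {p_seven_or_eleven}")
for rolls in range(1, 6):
    p_all = p_seven_or_eleven ** rolls  # rolls are independent
    print(f"P(all {rolls} rolls are 7 or 11) = {float(p_all):.6f}")
```

One roll has probability 8/36 = 2/9 of summing to 7 or 11, but five such rolls in a row have probability (2/9)^5 = 32/59049, roughly 0.0005, if the dice are fair. That is why students quickly become suspicious.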

For part (i) of Investigation 1.5.1, we have students create a dotplot on the board, with each student placing five dots (one for each repetition of the randomization process) on the plot. You can also have students turn in their results for you to compile between classes, or have them list their results to you orally (or as you walk around the room) while you (or a student assistant) type them into Minitab or place them on the board yourself. In part (s), it’s instructive to ask several students to report what they obtain for the empirical p-value, because students should see that while these estimates differ from student to student, they are actually quite similar.

The data described in Investigation 1.5.1 have been slightly altered. In the actual study, 11 observers were assigned to Group A and 12 to Group B; however, we preferred that the column totals not be the same as the row totals. Students should find the initial questions very straightforward, and again you could ask them to complete some of this background work prior to the class meeting.

Some notes on the applet:

- holding the mouse over the deck of cards reveals the

composition of the deck (students should note the 13 red and the 11 black

cards).

- with 1000 repetitions, when you “show tallies” the values

tend to crash a bit, but students should be able to parse them out.

- the “Difference in Proportion” button is meant to help students see the transition between this distribution and the distribution of p̂A – p̂B (the difference in group proportions) that they will work with later, but it may not be worth addressing at this point in the course.

- we encourage you to continually refer to the visual image

of the empirical randomization distribution given by the applet when

interpreting p-values.

One distinction in terminology that you may want to

highlight for students is that the term “randomization distribution” refers to

all possible randomizations, while the phrase “empirical randomization

distribution” means the approximation to the randomization distribution,

generated by a simulation that repeats the randomization process a large number

of times.
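To make the distinction concrete, the sketch below generates an empirical randomization distribution the way the cards do: shuffle the deck, deal one group, and count the red cards. It is a minimal Python sketch that rests on several assumptions. It uses the 13 red and 11 black cards mentioned for the applet, assumes two groups of 12, treats “red” as the response category being counted, and uses a hypothetical observed count of 3. The p-value direction (“observed or fewer”) mirrors the applet’s default “<=” setting.

```python
import random

def simulate_red_in_group_a(n_red=13, n_black=11, n_group_a=12, reps=1000, seed=None):
    """Shuffle a deck of red/black cards, deal n_group_a cards to Group A,
    and record how many reds land there; repeat reps times."""
    rng = random.Random(seed)
    deck = ["red"] * n_red + ["black"] * n_black
    counts = []
    for _ in range(reps):
        rng.shuffle(deck)  # one random assignment of the 24 cards to the two groups
        counts.append(deck[:n_group_a].count("red"))
    return counts

def empirical_p_value(counts, observed):
    """Proportion of simulated randomizations with the observed number of reds or fewer."""
    return sum(c <= observed for c in counts) / len(counts)

counts = simulate_red_in_group_a(reps=1000, seed=1)
print(empirical_p_value(counts, observed=3))  # observed=3 is a hypothetical value
```

Rerunning the sketch with different seeds yields slightly different estimates. This is the same student-to-student variation that appears when students compare their empirical p-values.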

There are some important points for students to be

comfortable with in the discussion on p. 50.

In particular, we want students to realize throughout the course how the

p-value presents a measure of the

strength of evidence along a continuous scale.

You might tell students to think of this as a grayscale and not just

black/white. You will also want to emphasize that the p-value measures how often research results at least this extreme would occur by chance if there were no true difference between the

experimental groups. You might also

want to remind students that the terminology introduced in this investigation

will be used throughout the rest of the course.

Section 1.6: Probability and Counting Methods

Timing/Materials:

This section, consisting of two investigations, should take approximately 65

minutes, but could be much less if your students are already familiar with

combinations. You may also choose to supplement with some other probability

applications and/or discussion of lotteries.

An applet is used in both investigations, and you may want to use Excel

in Investigation 1.6.2.

At this point, quantitatively inclined students are often

chomping at the bit for a more analytic approach for determining “exact p-values” that circumvents the need for

the approximating simulations.

Investigation 1.6.1 introduces them to the idea of probability as the

long-run relative frequency of an outcome in a rather silly, but memorable,

“application.” We apologize for the

context, but students do tend to remember it and the investigation is closely

tied to a Java applet that graphs the behavior of the empirical probability

over the number of repetitions. It will

be important to have longer discussions on what the applet’s pictures represent

(either as a class or a writing assignment).
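The applet’s picture, empirical probability plotted against the number of repetitions, can also be mimicked in a few lines. This Python sketch does not reproduce the investigation’s own context. Instead it assumes a generic event with a hypothetical probability of 0.3 and tracks the running proportion of occurrences.

```python
import random

def running_relative_frequency(p, n_reps, seed=None):
    """Simulate n_reps independent trials in which an event occurs with
    probability p, and return the cumulative proportion of occurrences
    after each trial (the empirical probability as repetitions accumulate)."""
    rng = random.Random(seed)
    successes = 0
    history = []
    for i in range(1, n_reps + 1):
        if rng.random() < p:
            successes += 1
        history.append(successes / i)
    return history

freqs = running_relative_frequency(p=0.3, n_reps=10000, seed=42)
for n in (10, 100, 1000, 10000):
    print(n, round(freqs[n - 1], 3))
```

The early values bounce around, while the later ones settle near 0.3. This is the “long-run” part of the interpretation that the applet displays.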

This investigation also introduces the idea, and parallel

interpretation, of expected value. We

emphasize two aspects of interpreting expected value: long-run and average;

some students tend to ignore the “average” part. You will especially want to recap the idea of

a random variable with your students.
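The two aspects of expected value, “long-run” and “average,” can be shown side by side. The hypothetical random variable here is X = number of heads in three fair coin tosses, not the example from the investigation. The Python sketch computes E(X) as the sum of x · P(X = x) and then compares it with the average of many simulated values of X.

```python
import random
from fractions import Fraction
from itertools import product

def distribution_of_heads(n_tosses=3):
    """Probability distribution of X = number of heads, built by listing
    all 2**n equally likely sequences of fair tosses."""
    seqs = list(product("HT", repeat=n_tosses))
    dist = {}
    for seq in seqs:
        x = seq.count("H")
        dist[x] = dist.get(x, 0) + Fraction(1, len(seqs))
    return dist

def expected_value(dist):
    """E(X) = sum of x * P(X = x)."""
    return sum(x * p for x, p in dist.items())

def long_run_average(n_tosses, reps, seed=None):
    """Average value of X over many simulated repetitions."""
    rng = random.Random(seed)
    total = 0
    for _ in range(reps):
        total += sum(rng.random() < 0.5 for _ in range(n_tosses))
    return total / reps

print(expected_value(distribution_of_heads(3)))  # 3/2
print(long_run_average(3, 100000, seed=7))       # close to 1.5
```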

At this point you may choose to introduce some other interpretations

of probability, e.g., subjective probability, to introduce them to the

diversity of uses of the term. Also,

while the calculations in this course often make use of the equal probability

of outcomes from the randomization, you might want to caution them not to assume that outcomes are always equally likely.

The following transcript from a Nightline

broadcast a few years ago may help bring home the point:

In Investigation 1.6.2, students use this basic probability

knowledge to calculate a probability using combinations, emphasizing the

distinction between an empirical and an exact probability. You may want to show

your students how to do these combination calculations on their calculator as

well as in Excel. We often also advise

students that we are more interested in their ability to set up the calculation

(e.g., on an exam). We also strive to

keep the “statistical motivation” of these calculations in mind – how often do

experimental results as extreme as this happen “by chance.” In using the Two-way Table Simulation applet, the empirical p-value is calculated by default as the proportion of repetitions with the observed number of successes in Group A or fewer. To change this, using the version of the applet on the web (not the CD), first press the “<=” button to toggle it to “>=”. The empirical p-value will then be found by counting the repetitions with the observed number of successes in Group A or more. In this problem, we ask students to find “as many successes” in the coated suture group, so you may want to motivate this direction with your students.
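As a companion to the calculator or Excel work, the Python sketch below sets up the same kind of counting argument. It computes the exact probability of “this many successes or more” in Group A from combinations, then estimates that probability by shuffling, so that the contrast between exact and empirical probability is visible. The table used here (20 subjects, 8 successes overall, 10 in Group A, 6 successes observed in Group A) is hypothetical and is not the coated suture data.

```python
import random
from math import comb

def hypergeom_pmf(x, N, M, n):
    """P(X = x): chance that exactly x of the M successes fall in Group A (size n)
    when N subjects are split at random, counted with combinations."""
    return comb(M, x) * comb(N - M, n - x) / comb(N, n)

def exact_p_value_at_least(observed, N, M, n):
    """Exact P(X >= observed): add the probabilities of all counts this large or larger."""
    return sum(hypergeom_pmf(x, N, M, n) for x in range(observed, min(M, n) + 1))

def empirical_p_value_at_least(observed, N, M, n, reps=10000, seed=None):
    """Estimate the same probability by repeatedly shuffling and dealing subjects."""
    rng = random.Random(seed)
    subjects = [1] * M + [0] * (N - M)  # 1 = success, 0 = failure
    hits = 0
    for _ in range(reps):
        rng.shuffle(subjects)
        hits += sum(subjects[:n]) >= observed
    return hits / reps

print(exact_p_value_at_least(6, N=20, M=8, n=10))
print(empirical_p_value_at_least(6, N=20, M=8, n=10, seed=2))
```

The exact value here is (C(8,6)C(12,4) + C(8,7)C(12,3) + C(8,8)C(12,2)) / C(20,10) = 15686/184756 ≈ 0.085. Students can set this up by hand with a calculator’s nCr key.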

Section 1.7: Fisher’s Exact Test

Timing: This section should take about 100 minutes. You may also wish to provide more

practice carrying out Fisher’s Exact Test and discussing the overall

statistical process. In Investigation

1.7.1, students are asked to create a segmented bar graph and calculate p-values (meaning technology is helpful

but not essential). They are also asked

to compare back to their simulation results in Investigation 1.5.1.

Investigation 1.7.1 brings the statistical analysis full

circle by formally introducing the hypergeometric probabilities to calculate p-values for two-way tables and pulling

together the ideas of the previous two sections. It first continues the analysis of the

“Friendly Observers” study, for which students have only approximated the p-value so far, and asks them to

calculate the exact p-value now that they are familiar with combinations (and the addition rule for

probabilities). The questions step them

through constructing hypergeometric probabilities. You may want to help them through this as a

class and then emphasize the structure of the calculations. It is also worth showing them that the same

calculation can be set up several different ways and arrive at the same p-value (as long as they are

consistent), e.g., top of p. 68. Many

students struggle with this, but it is worth helping them to understand,

because then they do not have to worry about which category to consider

“success” or “failure.” Students are

then asked to turn the calculations over to Minitab. We hope students will soon become comfortable

using Minitab to calculate these probabilities and we try not to spend too long

on the combinations calculations. We show several ways to arrive at the

calculations so that they may find the method they are most comfortable

with. You can also use Minitab or other

software to show them lots of graphs of the hypergeometric distribution for

different parameter values. The

investigation also reminds them of the idea of expected value and how it may be

calculated. Questions (s) and (t) are

important for helping students use the complement rule with integer values to

calculate (upper-tail) p-values in

Minitab. This idea will occur quite

frequently in the course, and many students struggle with it, so it is

important to get them used to it quickly.
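The integer-valued complement rule is the step that trips students up. Software typically reports cumulative probabilities P(X ≤ x), so an upper tail P(X ≥ k) has to be found as 1 − P(X ≤ k − 1), not 1 − P(X ≤ k). The Python sketch below is not Minitab. It shows both the correct calculation and the common slip on a hypothetical table (20 subjects, 8 successes, 10 in Group A) and also computes the expected count n·M/N.

```python
from math import comb

def hypergeom_cdf(x, N, M, n):
    """P(X <= x) for the number of successes X in Group A (a cumulative
    probability, like the ones statistical software reports)."""
    lower = max(0, n - (N - M))  # smallest possible count in Group A
    upper = min(x, M, n)         # largest count we need to include
    ways = sum(comb(M, k) * comb(N - M, n - k) for k in range(lower, upper + 1))
    return ways / comb(N, n)

def upper_tail(k, N, M, n):
    """P(X >= k) via the complement rule. Because X takes only integer values,
    'at least k' is the complement of 'at most k - 1', not of 'at most k'."""
    return 1 - hypergeom_cdf(k - 1, N, M, n)

def expected_count(N, M, n):
    """Expected number of successes in Group A: n * M / N."""
    return n * M / N

print(upper_tail(6, N=20, M=8, n=10))         # correct: 1 - P(X <= 5)
print(1 - hypergeom_cdf(6, N=20, M=8, n=10))  # common slip: this is P(X >= 7)
print(expected_count(N=20, M=8, n=10))        # 4.0
```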

Beginning with question (u), Investigation 1.7.1 transitions into

focusing on the effect of sample size on the p-value. This gives students

additional practice while making a very important statistical point that will

recur throughout the course.
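One way to preview the sample-size point is to keep the group proportions fixed and scale up the table. In the hypothetical results used below, 60% of Group A and 20% of Group B are successes at every sample size. The Python sketch computes a one-sided Fisher’s exact p-value (the probability of at least the observed number of successes in Group A, with the margins fixed) for each size.

```python
from math import comb

def fisher_upper_p_value(a_success, a_size, b_success, b_size):
    """One-sided Fisher's exact test p-value, P(X >= a_success), where X is the
    number of successes landing in Group A when the table's margins are fixed."""
    N = a_size + b_size
    M = a_success + b_success
    upper = min(M, a_size)
    ways = sum(comb(M, x) * comb(N - M, a_size - x) for x in range(a_success, upper + 1))
    return ways / comb(N, a_size)

# Hypothetical results: 60% vs 20% success, with the same proportions at larger sample sizes
for scale in (1, 2, 3):
    p = fisher_upper_p_value(6 * scale, 10 * scale, 2 * scale, 10 * scale)
    print(f"{10 * scale} per group: p-value = {p:.4f}")
```

The observed difference in proportions is identical at each size, yet the p-value shrinks as the groups grow.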

Investigation 1.7.2 gives them additional practice with

Fisher’s Exact Test but also brings up the debate of what the p-value really means with observational

data. We like to tell students that with

observational studies, a very small p-value

can essentially eliminate random variation as an explanation (for an observed

association between explanatory and response variables), but the possibility of

confounding variables cannot be ruled out.

Summary

Students need to be strongly encouraged to read the Chapter

Summary and the Technology Summary. If

the classroom environment is more “discovery-oriented,” students will need to

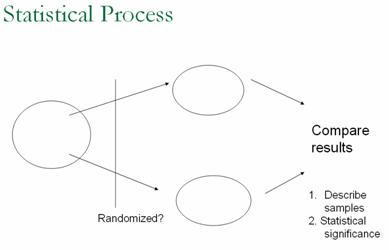

carefully organize their notes. You

should remind them that this course will be rather “cyclic” in that these ideas

will return and be built upon in later chapters. You might also consider showing students a

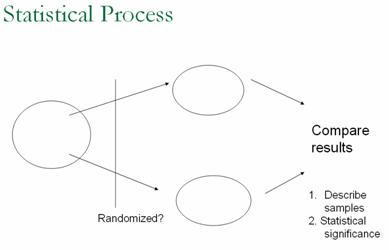

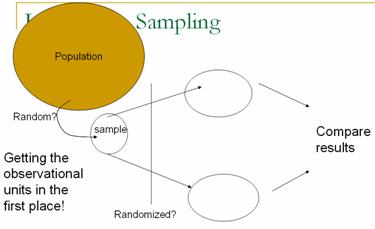

graphic of the overall statistical process and how the ideas they have learned

so far fit in, e.g.:

With these students,

we have had good luck asking them to submit potential exam questions as part of

the review process.

Chapter 2

This chapter parallels the previous chapter (considering

data collection issues, numerical and graphical summaries, and statistical

inference from empirical p-values)

but for quantitative variables instead of categorical variables. The themes of considering the study design

and exploring the data are reiterated to remind students of their importance. Analyses for quantitative data are a bit more

complicated than for categorical data, because no longer does one number

suitably summarize a distribution by itself, and we also need to focus on

aspects such as shape, center, and spread in describing these

distributions. This also leads to

heavier use of Minitab for analyzing data (e.g., constructing graphs and

calculating numerical summaries) as well as for simulating randomization

distributions. If your class does not

meet regularly in a computer lab, you might want to consider having students

work through the initial study questions of several investigations, saving up

the Minitab analysis parts for when you can visit a computer lab. Or if you do not have much lab access, you

could use computer projection to demonstrate the Minitab analyses. Keep in mind that there are a few menu

differences if you are using Minitab 13 instead of Minitab 14 (see the PowerPoint slides for Day 8 of Stat

212). One thing you will want to discuss

with your students is the best way to save and import graphics for your

computer setting. Some things we’ve used

can be found here.

Section 2.1: Summarizing Quantitative Data

Timing/Materials:

This section covers graphical and numerical summaries for

quantitative data, and these investigations will take several class

sessions. Students will be using Minitab

in Investigations 2.1.3 (oldfaithful.mtw),

2.1.5 (temps.mtw), and 2.1.6 (fan03.mtw; the ISCAM webpage also provides access to the most recent season’s data). Instructions for replicating the

output shown in Investigation 2.1.2 (CloudSeeding.mtw)

are included as a Minitab Detour on p. 111.

Excel is used in Investigation 2.1.7 (housing.xls). Investigations 2.1.1 and 2.1.2 together

should take about 50-60 minutes.

Investigations 2.1.3, 2.1.4, and 2.1.5 together should take another 60

minutes or so. Investigation 2.1.6 could

take 40-50 minutes, and Investigation 2.1.7

could take 50-60 minutes. You might

consider assigning Investigation 2.1.6 as a lab assignment that students work

on in pairs and complete the “write-up” outside of class. Or you can expand on the instructions for

Practice Problem 2.1.7 as the lab write-up assignment. Investigation 2.1.7 explores the mathematical properties of least squares estimation

in this univariate case and can be skipped or moved outside of class, perhaps

as a “lab assignment.”

Investigation 2.1.1 is meant to provoke informal discussions

of anticipating variable behavior. You

may choose to wait until students have been introduced to histograms (in which

case it could also serve to practice terminology such as skewness). One goal is to help students get used to

having the variable along the horizontal axis with the vertical axis

representing the frequencies of observational units. Furthermore, we want to build student

intuition for how different variables might be expected to behave.

Students usually quickly identify graphs 1 and 6 as either

the soda choice or the gender variable, the only categorical variables. Reasonable arguments can be made for either

choice. In fact, we try to resist telling students there is one “right answer.” This is another habit of mind we want them to develop in this statistics class that some students may not be expecting, as is the expectation that they will write coherent explanations. We tell them we are more interested in their justification than in their final choice, and that we will evaluate how well they support their answers and how consistent their arguments are. A clue could be given to remind students of the name of the course these 35 students were taking. This often leads students to select graph 1 as the gender variable, assuming the second bar represents a smaller proportion of women in a class for engineers and scientists. Students usually pick graphs 2 and 3 (the two right-skewed graphs) as number of siblings and haircut cost. We do hope they will realize that graph 3, with its gap in the second position and its longer right tail (encourage students to try to put numerical values along the horizontal scale), is not reasonable for number of siblings. However, for haircut cost, the higher peak at $0 (free haircuts by friends) and the gap between (probably) $5 and $10 do seem reasonable. (In fact, students often fail to consider that the graph might start at 0.) We also expect students to choose between height and guesses of age for graphs 4 and 5. Again, reasonable arguments could be made either way: one might expect a more symmetric shape for height, as is typical of a biological characteristic, or one could argue for a skewed shape for height (especially if students felt the class had a smaller proportion of women).

Again, we evaluate their ability to justify the variable behavior, not

just their final choice. This

investigation also works well as a paired quiz, but the habits of mind that this investigation advocates were part of our motivation for placing it first in the section.

In Investigation 2.1.2 students are introduced to some of

the common graphical and numerical summaries used with quantitative data, while

still in the context of comparing the results of two experimental groups. We

present these fairly quickly, and we emphasize the subtler ideas of comparing distributions,

because we don't really want to pretend that these mathematically inclined

students have never seen a histogram or a median before! (Note: the lower whisker on p. 108 extends to

the value 1.) After the Minitab detour

(which they can verify outside of class), this investigation concludes by

having students transform the data. While it does not involve calculus, transforming data is an idea that mathematically inclined students tend to handle more easily than their less mathematically inclined peers. This part of the investigation can be skipped

but there are later investigations that assume they have seen this

transformation idea. You might also consider asking students to work on these

Minitab steps outside of class.

Practice 2.1.1 may seem straightforward, but some students

struggle with it, and it does assess whether students understand how to

interpret boxplots.

Investigation 2.1.3 formally introduces measures of spread

and histograms. The data concern

observations of times between eruptions at Old Faithful. We have in mind that spread is a more

interesting characteristic than center for this distribution, because spread

relates to how consistent/predictable the time of the next eruption is. In fact, the 2003 distribution is worse for

tourists because the average wait time is much longer, but it is better in that

the wait times are much more predictable.

You may have students go to the website to look at current data and/or

pictures of geyser eruptions. One thing to insist on in their discussions of

the data is that they treat “IQR” as a number measuring the spread, not as a

range of values as many students are prone to do.

Investigation 2.1.4 asks students to think about how

measures of spread relate to histograms.

This is one of the rare times that we use hypothetical data, rather than

real data, because we have some very specific points in mind. This is a “low-tech” activity that can really

catch some students in common misconceptions and you will definitely want to

give students time to think through (a)-(d) on their own first. The goal is to entice these students in a

safe environment to make some common errors like mistaking bumpiness and

variety for variability (as explained in the Discussion) so they can confront

their misconceptions head on. Our hope is that the resulting cognitive

dissonance will deepen the students’ understanding of variability. It will be important to provide students

with immediate feedback on this investigation.

We encourage taking the time to have students calculate the

interquartile ranges by hand, as doing so for tallied data appears to be

nontrivial for them. This is a very

flexible investigation that you could plug into a 20-minute time slot wherever

it might fit in your schedule.
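For checking students’ by-hand work with tallied data, the Python sketch below expands a tally into individual observations and computes the quartiles as the medians of the lower and upper halves. This is one common by-hand convention, assumed here. The text’s or Minitab’s quartile rule may give slightly different values. The tallies are hypothetical. The sketch also reports the IQR as a single number, Q3 − Q1, rather than as a range of values.

```python
from statistics import median

def expand_tallies(tallies):
    """Turn {value: count} into a sorted list with each value repeated count times."""
    data = []
    for value in sorted(tallies):
        data.extend([value] * tallies[value])
    return data

def quartiles_by_halves(data):
    """Q1 and Q3 as medians of the lower and upper halves
    (one common by-hand convention; the middle value is left out when n is odd)."""
    data = sorted(data)
    half = len(data) // 2
    lower = data[:half]
    upper = data[half + len(data) % 2:]
    return median(lower), median(upper)

def iqr(data):
    """The interquartile range is a single number: Q3 - Q1."""
    q1, q3 = quartiles_by_halves(data)
    return q3 - q1

tallies = {1: 2, 2: 5, 3: 6, 4: 5, 5: 2}  # hypothetical histogram counts
data = expand_tallies(tallies)
print(quartiles_by_halves(data), iqr(data))
```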

The actual numerical values for Practice 2.1.3 are below:

| City | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Raleigh | 39 | 42 | 50 | 59 | 67 | 74 | 78 | 77 | 71 | 60 | 51 | 43 |
| SF | 49 | 52 | 53 | 56 | 58 | 62 | 63 | 64 | 65 | 61 | 55 | 49 |

Investigation 2.1.5 aims to motivate the idea of

standardized scores for “comparing apples and oranges.” While students may realize you are working

with a linear transformation of the data, we hope they will see the larger

message of trying to compare observations on different scales through

standardization. This idea of converting

to a common scale measuring the number of standard deviations away from the

mean will recur often. Practice 2.1.5

should help drive this point home. The

empirical rule is used to motivate an interpretation of standard deviation

(half-width of the middle 68% of a symmetric, mound-shaped distribution) that parallels their

understanding of IQR.
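A small worked example of “comparing apples and oranges” may help. The Python sketch below uses two hypothetical exams on different scales. The exam score data and the simulated bell-shaped sample are illustrative assumptions, not data from the text. The sketch converts each score to a z-score, (x − mean)/SD, and then checks the empirical rule’s “about 68% within one SD” on simulated mound-shaped data.

```python
import random
from statistics import mean, stdev

def z_score(x, data):
    """How many standard deviations x lies above (+) or below (-) the mean of data."""
    return (x - mean(data)) / stdev(data)

def proportion_within(data, k=1):
    """Fraction of observations within k standard deviations of the mean."""
    m, s = mean(data), stdev(data)
    return sum(abs(x - m) <= k * s for x in data) / len(data)

# Hypothetical scores on two differently scaled exams
exam1 = [60, 70, 80]
exam2 = [20, 25, 30]
print(z_score(80, exam1), z_score(32, exam2))  # 1.0 versus 1.4: the 32 is relatively better

# Empirical rule check on simulated mound-shaped data
rng = random.Random(3)
bell = [rng.gauss(0, 1) for _ in range(10000)]
print(round(proportion_within(bell, 1), 3))    # close to 0.68
```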

Investigation 2.1.6 gives students considerably more

practice in using Minitab to analyze data.

Students will probably need some help with questions (n)-(p) especially

if they are not baseball fans. These

questions can be addressed in class discussion, where those who are baseball

fans can be the “experts” for the day.

Still, we also want students to get into the mental habit of playing

detective as they explore data. We find

Practice 2.1.7 helps transition the data set to one that applies more directly

to individual students. We encourage you

to collect students’ written explanations (perhaps in pairs) to provide

feedback on their report writing skills (incorporating graphics and

interpreting results in context). If this practice problem is treated more as a lab assignment, you might consider a 20-point scale:

- Defining MCI: 2 pts
- Creating dotplots: 2 pts
- Creating boxplots: 2 pts
- Producing descriptive statistics: 2 pts
- Discussion: 8 pts (shape, center, spread, outliers)
- Removing one team and commenting on influence: 3 pts

Exploration 2.1 leads students to explore mathematical

properties of measures of center, and it also introduces the principle of least

squares in a univariate setting. As we

mentioned above, this investigation can be skipped or used as a group lab

assignment. Questions (a) and (b)

motivate the need for some criterion by which to compare point estimates, and

questions (c)-(h) reveal that the mean serves as the balance point of a

distribution. Beginning in (k), students

use Excel to compare various other criteria, principally the sum of absolute deviations (SAD) and the sum of squared deviations (SSD). Students who are not familiar with Excel may need some help, particularly with the “fill down” feature. Questions (o) and (p) are meant to show students that the location(s) of the minimum SAD value are not affected by the extremes but are affected by the middle values.
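The spreadsheet search can be mirrored in a few lines for instructors who want to check answers quickly. The Python sketch below evaluates SAD and SSD over a grid of candidate centers, much like filling down a column of candidate values. The data sets and the grid spacing of 0.5 are hypothetical choices. With an even number of values, SAD is minimized over the whole stretch between the two middle values, and making the largest value extreme does not move that stretch. The SSD minimum, the mean, does move.

```python
def sad(data, c):
    """Sum of absolute deviations (SAD) of the data from a candidate center c."""
    return sum(abs(x - c) for x in data)

def ssd(data, c):
    """Sum of squared deviations (SSD) of the data from a candidate center c."""
    return sum((x - c) ** 2 for x in data)

def minimizers(criterion, data, lo, hi, step=0.5):
    """Evaluate criterion at c = lo, lo + step, ..., hi (like filling down a column of
    candidate values in a spreadsheet) and return every c that attains the minimum."""
    n_steps = int(round((hi - lo) / step))
    candidates = [lo + i * step for i in range(n_steps + 1)]
    values = [criterion(data, c) for c in candidates]
    best = min(values)
    return [c for c, v in zip(candidates, values) if v == best]

print(minimizers(sad, [1, 3, 4, 8], 0, 10))    # [3.0, 3.5, 4.0]: flat between the middle two values
print(minimizers(sad, [1, 3, 4, 80], 0, 100))  # unchanged when the largest value becomes extreme
print(minimizers(ssd, [1, 3, 4, 8], 0, 10))    # [4.0], the mean
print(minimizers(ssd, [1, 3, 4, 80], 0, 100))  # [22.0], the mean follows the extreme value
```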

Students will be challenged to make a conjecture in (q), but some

students will realize that the median does the job. Questions (t)-(w) redo the analysis for the

sum of squared deviations, and in (x) students are asked to use calculus to

prove that the mean minimizes SSD. This

calculus derivation goes slowly for most students working on their own, so you

will want to decide whether to save time by leading them through that. Practice 2.1.8 extends the analysis to an odd

number of observations, where the SAD criterion now has a unique minimum at the

median. Practice 2.1.9 asks students

to create an example based on the resistance properties of these numerical

measures and is worth discussing even if Exploration 2.1 is not assigned.
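If you decide to lead students through the calculus in (x), one version of the argument is sketched below. The notation is ours and may differ from the text’s: treat SSD as a function of the candidate center c, differentiate, and set the derivative to zero.

```latex
\begin{aligned}
\mathrm{SSD}(c) &= \sum_{i=1}^{n} (x_i - c)^2 \\
\frac{d}{dc}\,\mathrm{SSD}(c) &= -2\sum_{i=1}^{n} (x_i - c) = -2\left(\sum_{i=1}^{n} x_i - nc\right) \\
\frac{d}{dc}\,\mathrm{SSD}(c) = 0 &\iff c = \frac{1}{n}\sum_{i=1}^{n} x_i = \bar{x} \\
\frac{d^2}{dc^2}\,\mathrm{SSD}(c) &= 2n > 0
\end{aligned}
```

The positive second derivative confirms that this critical point is a minimum, so the mean minimizes SSD.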

Section 2.2: Statistical Significance

Timing/Materials: Students are asked to conduct a simulation

using index cards in Investigation 2.2.1, followed up by creating and executing

a Minitab macro. This macro is used

again in Investigation 2.2.2 and then modified to carry out an analysis in

Investigation 2.2.3. This section might

take 75-90 minutes.

This section again returns to the question of statistical

significance, as in Chapter 1, but now for a quantitative response variable. Students will use shuffled cards and then the

computer to simulate a randomization distribution. However this time there will not be a

corresponding exact probability model (as there was with the hypergeometric

distribution from Chapter 1), because we need to consider not only the number

of randomizations but also the value of the difference in group means for each

randomization, which is very computationally intensive. We encourage you to especially play up the context

in Investigation 2.2.1, where students can learn a powerful message about the

effects of sleep deprivation. (It has been shown that sleep deprivation impairs

visual skills and thinking the next day, and this study indicates that the

negative effects persist 3 days later.)

The tactile simulation will probably feel repetitive, so you may try to

streamline it, but we have found students still need some convincing on the

process behind a randomization test. It

is also interesting to have students examine medians as well as means. In question (h) we again have students add

their results to a dotplot on the board.

Students then use Minitab to replicate the randomization

process. They do this once by directly

typing the commands in Minitab (question j), where you might want to make sure

that they understand what each line is doing. (One common frustration is that

if students mis-type a Minitab command, they cannot simply go back and edit it;

they need to re-type or copy-and-paste the edited correction at the most recent

MTB> prompt.) But then rather than

have to copy-and-paste those lines 1000 times, they are then stepped through the

creation of a Minitab macro to repeat their commands and thus automate that

process. This is the first time students

create and use a Minitab macro, in which they provide Minitab with the relevant

Session commands (instead of working through the menus). Some students will pick up these programming

ideas very quickly; others will need a fair bit of help. You may want to pause often to make sure

they understand what is going on in Minitab.

If a majority of your students do not have programming background, you

may want to conduct a demonstration of the procedure first. The two big issues are usually helping

students save the file in a form that is easily retrieved later and getting

them into the habit of using (and initializing!) a counter. We suggest using a text editor (rather than a

word processing program) for creating these macro files so Minitab has less

trouble with them. In fact, Notepad can

be accessed under the Tools menu in Minitab.

It is also a nice feature that this file can be kept open while the

student runs the macro in Minitab. In

saving these files, you will want to put double quotes around the file

name. This prevents the text editor from

adding “.txt” to the file name. The

macro will still run perfectly fine with a .txt extension but it is a little

harder for Minitab to find the file (it only automatically lists those files

that have the .mtb extension – you would need to type *.txt in the File name

box first to be able to see and select the file if you don’t use the .mtb

extension). On some computer systems,

you also have to be rather careful in which directories you save the file. You might want students to get into the habit

of saving their files onto personal disks or onto the computer desktop for

easier retrieval. Forgetting to initialize the counter (let k1=1 at the MTB> prompt) before running the macro is the most common error that

occurs; students also need to be reminded that spelling and punctuation are

crucial to the functionality of the macro.

We encourage students to get into the habit of running the macro once to

make sure it is executing properly before they try to execute it 1000

times. These steps may require some trial

and error to smooth out the kinks.

In

this investigation, you will want to be careful in clarifying in which

direction to carry out the subtraction (in fact, for (k), we suggest instead using let c6(k1)=mean(c5)-mean(c4), and then in part (m), using let c8=(c6>=15.92); then consider the area to the right of +15.92 in the graph on p.

148). Indicator variables, as in

(m), will also be used extensively throughout the text. We do show students the results of generating

all possible randomizations in (q) to convince them of the intractability of

this exact approach and to sow the seeds for later study of the t distribution.
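For instructors who want to see the logic of the macro outside Minitab, here is a rough Python analog. It is not a Minitab macro. Each repetition re-randomizes the pooled responses into groups of the original sizes and records mean(second group) − mean(first group), which plays the role of c6(k1). The p-value is then the average of the indicator (difference ≥ observed), like summing c8. Which Minitab columns hold which group is an assumption here, and the data are made-up values, not the sleep deprivation measurements.

```python
import random
from statistics import mean

def randomization_diffs(group1, group2, reps=1000, seed=None):
    """Re-randomize the pooled responses into groups of the original sizes reps times,
    recording mean(group 2) - mean(group 1) each time (the role played by c6(k1))."""
    rng = random.Random(seed)
    pooled = list(group1) + list(group2)
    n1 = len(group1)
    diffs = []
    for _ in range(reps):
        rng.shuffle(pooled)
        diffs.append(mean(pooled[n1:]) - mean(pooled[:n1]))
    return diffs

def empirical_p_value(diffs, observed):
    """Average of the indicator (diff >= observed), like summing c8=(c6>=observed)."""
    indicators = [d >= observed for d in diffs]
    return sum(indicators) / len(indicators)

# Hypothetical responses, NOT the sleep deprivation data
deprived = [1, 2, 3, 4, 5]
unrestricted = [11, 12, 13, 14, 15]
observed = mean(unrestricted) - mean(deprived)  # 10 for these made-up values
diffs = randomization_diffs(deprived, unrestricted, reps=2000, seed=6)
print(observed, empirical_p_value(diffs, observed))
```

Because the loop handles the counter automatically, the sketch sidesteps the “initialize k1” issue. Running the macro once before running it 1000 times serves the same purpose as trying this with a small reps value first.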

Investigations 2.2.2 and 2.2.3 provide further practice with

simulating a randomization test while focusing on two statistical issues: the

effect of within group variability on p-values

(having already studied sample size effects in Chapter 1) and the

interpretation of p-values with

observational data. Question (b) of

Investigation 2.2.2 is a good example of how important we think it is to force

students to make predictions, regardless of whether or not their intuition

guides them well at this point; students struggle with this one, but we hope

that they better remember and understand the point (that more variability

within groups leads to less convincing evidence that the groups differ) through

having made a conjecture in the first place.
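To check the answer to (b) after students have made their predictions, the Python sketch below enumerates every possible re-randomization for two small, made-up data sets. This is feasible only because each group has just 4 values (70 possible splits). In both data sets, the group means differ by exactly 5 units. Only the within-group spread changes.

```python
from itertools import combinations
from statistics import mean

def exact_randomization_p_value(group1, group2):
    """Enumerate every way to split the pooled responses into groups of the original
    sizes and return the proportion with mean(group2) - mean(group1) at least as large
    as observed (feasible only for small samples)."""
    pooled = list(group1) + list(group2)
    n, n1 = len(pooled), len(group1)
    observed = mean(group2) - mean(group1)
    total = 0
    extreme = 0
    for idx in combinations(range(n), n1):
        chosen = set(idx)
        g1 = [pooled[i] for i in idx]
        g2 = [pooled[i] for i in range(n) if i not in chosen]
        if mean(g2) - mean(g1) >= observed - 1e-9:  # small tolerance for floating point
            extreme += 1
        total += 1
    return extreme / total

# Hypothetical data: both pairs of groups have means 10 and 15
less_spread = ([9, 10, 11, 10], [14, 15, 16, 15])
more_spread = ([4, 8, 12, 16], [9, 13, 17, 21])
print(exact_randomization_p_value(*less_spread))  # 1/70: only the actual split is this extreme
print(exact_randomization_p_value(*more_spread))  # 7/70 = 0.1: weaker evidence
```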

The subsequent practice problems are a bit more involved than most, so

you may want to incorporate them more into class and/or homework.

Chapter 3

In this chapter, we transition from comparing two groups to

focusing on how to sample the observational units from a population. While issues of generalizing from the sample

to the population were touched on in Chapter 1, in this chapter students formally

learn about random sampling. We focus

(but not exclusively) on categorical variables and introduce the binomial

probability distribution in this chapter, along with more ideas and notation of

significance testing. There is a new

spate of terminology that you will want to ensure students have sufficient

practice applying. In particular, we try

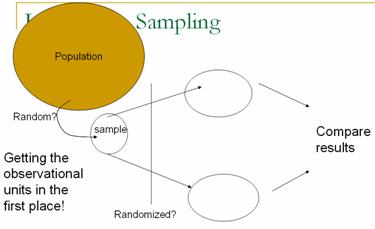

hard to help students clearly distinguish between the processes of random sampling and randomization, as well as the goals and implications of each.

Section 3.1: Sampling from Populations I

Timing/Materials: Investigation 3.1.1 should take about one

hour. We encourage you to have students

use Minitab to select the random samples but they can also use the calculator

or a random number table (if supplied).

Investigation 3.1.2 can be discussed together quickly at the end of a

class period, about 15 minutes. An

applet is used in Investigation 3.1.3 and there are some Minitab questions in

Investigation 3.1.4. These two

investigations together probably take 60-75 minutes. You will probably want students to be able to

use Minitab in Investigation 3.1.5, which can take 30-40 minutes. Exploration 3.1 is a Minitab exercise

focusing on properties of different sampling methods (e.g., stratified

sampling) which could be moved outside of class (about 30 minutes) or skipped.

Investigation 3.1.2 and Exploration 3.1 can be regarded as optional if you are

short on time.

The goal of Investigation 3.1.1 is to convince students of

the need for (statistical) random sampling rather than convenience sampling or

human judgment. Some students want to quibble that part (a) only asks for “representative words,” which they interpret as words typical of the language of the time or words conveying the meaning of the speech. This allows you to discuss that by “representative” we mean having the same characteristics as the population, regardless of which characteristics you will decide to focus

on. Here we focus on length of words,

expecting most students to oversample the longer words. Through constructing the dotplot of their

initial sample means (again, we usually have students come to the front of the

class to add their own observation), we also hope to provide students with a

visual image of bias, with almost all of the student averages falling above the

population average. We hope having

students construct graphs of their sample data (in (d)) prior to the empirical

sampling distribution (in (k)) will help them distinguish between these

distributions. You will want to point

out this distinction frequently (as well as insisting on proper horizontal

labels of the variable for each graph – word length in (d) and average word

length in (k)). We think it’s especially important and helpful to emphasize

that the observational units in (d) are the words themselves, but the

observational units in (k) are the students’ samples of words; this is a difficult but important conceptual leap for students to make. The sampling distribution of the sample proportions of long words in (k), due to the small sample size and granularity, may not produce as dramatic an illustration of bias, but will still help get

students thinking about sampling distributions and sampling variability. This investigation also helps students

practice with the new terminology and notation related to parameters and

statistics. We often encourage students

to remember that the population parameters are denoted by Greek letters, as in

real applications they are unknown to the researchers (“It’s Greek to

me!”). The goals of questions (r)-(w)

are to show students that random sampling does not eliminate sampling

variability but does eliminate bias by moving the center of the sampling

distribution to the parameter of interest.
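To show why random sampling removes bias while human selection does not, the Python sketch below compares simple random samples with a crude model of “picking words that catch your eye.” It rests on two assumptions. First, the population is a hypothetical set of 100 word lengths, not the actual Gettysburg Address data. Second, the biased picker chooses each word with probability proportional to its length. The sample means from random samples center on the population mean, while the length-biased ones center well above it.

```python
import random
from statistics import mean

# Hypothetical population of 100 word lengths (not the actual Gettysburg Address data)
POPULATION = [1] * 10 + [2] * 20 + [3] * 25 + [4] * 20 + [5] * 10 + [7] * 10 + [10] * 5

def srs_means(population, n, reps, seed=None):
    """Sample means from reps simple random samples of size n."""
    rng = random.Random(seed)
    return [mean(rng.sample(population, n)) for _ in range(reps)]

def length_biased_means(population, n, reps, seed=None):
    """Sample means when each pick favors longer words (chance proportional to length),
    a crude stand-in for people's tendency to notice long words."""
    rng = random.Random(seed)
    means = []
    for _ in range(reps):
        remaining = list(population)
        chosen = []
        for _ in range(n):
            i = rng.choices(range(len(remaining)), weights=remaining)[0]
            chosen.append(remaining.pop(i))  # sample without replacement
        means.append(mean(chosen))
    return means

print(mean(POPULATION))                                                  # parameter: 3.75
print(round(mean(srs_means(POPULATION, 5, 2000, seed=1)), 2))            # centered near 3.75
print(round(mean(length_biased_means(POPULATION, 5, 2000, seed=1)), 2))  # centered well above
```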

By question (w) students should believe that a method using a small

random sample is better than using larger nonrandom samples. Practice 3.1.1 uses a well-known historical

context to drive this point home. (You can also discuss Gallup’s ability to predict both the Digest results and the actual election results much more accurately with a smaller sample.)

Investigation 3.1.2 is meant as a quick introduction to

alternative sampling methods: systematic, multistage, and stratified. This investigation is optional (you may even choose simply to talk students through these ideas), but it’s useful for them to consider methods that are still statistically random but that may be more convenient to use in practice.

In the remainder of the text, we do not consider how to make

inferences from any sampling techniques other than simple random sampling.

Investigation 3.1.3 introduces students to the second key

advantage of using random sampling: not only does it eliminate bias, but it

also allows us to quantify how far we expect sample results to fall from

population values. This investigation

continues the exploration of samples of words from the Gettysburg Address using a Java applet to

take a much larger number of samples and to more easily change the sample size

in exploring the sampling distribution of the sample mean. Students should also see a visual of a

third distribution (beyond the sample and the empirical sampling distribution):

the population. The questions step students through the sampling process in the

applet very slowly to ensure that they understand what the output of the applet

represents (e.g., what each green square stands for). The focus here is on the fundamental

phenomenon of sampling variability, and on the effect of sample size on

sampling variability; we are not leading students to the Central Limit Theorem

quite yet. A common student difficulty

is distinguishing between the sample size and the number of samples, so you will

want to discuss that distinction frequently.
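To make the sample size versus number of samples distinction concrete, the sketch below keeps the two quantities as separately named parameters. The population of 250 word lengths is hypothetical and randomly generated, not the actual Gettysburg data. Under these assumptions, increasing the sample size should shrink the spread of the sample means, while increasing the number of samples only gives a steadier picture of the same distribution.

```python
import random
import statistics

rng = random.Random(7)
# Hypothetical population: 250 word lengths between 1 and 10.
population = [rng.randint(1, 10) for _ in range(250)]

def sample_means(pop, sample_size, num_samples, rng):
    """One sample mean per repetition.

    sample_size -- number of words in EACH sample (n)
    num_samples -- number of times the sampling process is repeated
    """
    return [statistics.mean(rng.sample(pop, sample_size)) for _ in range(num_samples)]

spread = {}
for n in (5, 20):
    for reps in (100, 5000):
        means = sample_means(population, n, reps, rng)
        spread[(n, reps)] = statistics.stdev(means)
        print(f"sample size {n:>2}, {reps:>4} samples: "
              f"center {statistics.mean(means):.2f}, SD {spread[(n, reps)]:.3f}")
```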

Questions (m)-(p) attempt to help students to continue to think in terms

of statistical significance by asking if certain sample results would be

surprising; this reinforces the idea of an empirical p-value that arose in both Chapters 1 and 2. You may consider a simple PowerPoint

illustration to help them focus on the overall process behind a sampling

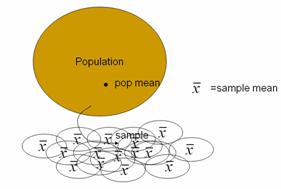

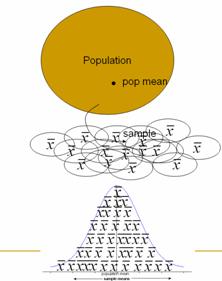

distribution (copy here), e.g.:
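The empirical p-value idea in questions (m)-(p) amounts to counting how often random samples produce a result at least as extreme as the one observed. A minimal sketch, assuming a hypothetical population of word lengths and hypothetical observed sample means of 6.0 and 4.4 for samples of 5 words:

```python
import random
import statistics

# Hypothetical population of word lengths (length -> count); not the real data.
counts = {1: 20, 2: 40, 3: 60, 4: 50, 5: 30, 6: 20, 7: 15, 8: 10, 9: 5, 10: 3}
population = [length for length, count in counts.items() for _ in range(count)]

def empirical_p_value(pop, n, observed_mean, num_samples, rng):
    # Fraction of simulated random samples whose mean is at least the observed mean.
    hits = sum(statistics.mean(rng.sample(pop, n)) >= observed_mean
               for _ in range(num_samples))
    return hits / num_samples

rng = random.Random(42)
p_unusual = empirical_p_value(population, 5, 6.0, 10_000, rng)
p_ordinary = empirical_p_value(population, 5, 4.4, 10_000, rng)
print(f"P(sample mean >= 6.0) is about {p_unusual:.4f}")
print(f"P(sample mean >= 4.4) is about {p_ordinary:.4f}")
```

A small proportion suggests the observed result would be surprising if the sample really came from this population; a large one suggests it would not.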

This exploration continues in Investigation 3.1.4, now for

sample proportions. Again the focus is on

exploring sampling variability and sample size effects through the applet

simulation. Beginning in question (e), students

see that the hypergeometric probability distribution describes the exact

sampling distribution in this case.

Students again use Minitab to construct the theoretical sampling

distribution and expected value, which they can compare to the applet simulation

results. Students continue this

comparison by calculating the exact probability of certain values and comparing it to

the proportion of simulated samples with those values.
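Students do this comparison in Minitab and the applet; a rough Python equivalent is sketched below. The population (250 words, 100 of them classified as successes) and the sample size of 10 are hypothetical values chosen for illustration. The hypergeometric probabilities are computed directly from counts with `math.comb`.

```python
import math
import random

def hypergeom_pmf(k, N, M, n):
    # P(X = k): k successes in a sample of n drawn without replacement
    # from a population of N items, M of which are successes.
    return math.comb(M, k) * math.comb(N - M, n - k) / math.comb(N, n)

N, M, n = 250, 100, 10  # hypothetical population size, successes, sample size
pmf = [hypergeom_pmf(k, N, M, n) for k in range(n + 1)]
expected = sum(k * prob for k, prob in enumerate(pmf))  # should equal n * M / N

# Simulate the same process, as the applet does.
rng = random.Random(3)
population = [1] * M + [0] * (N - M)
reps = 10_000
tally = [0] * (n + 1)
for _ in range(reps):
    tally[sum(rng.sample(population, n))] += 1

for k in range(n + 1):
    print(f"X = {k:>2}: exact {pmf[k]:.4f}, simulated {tally[k] / reps:.4f}")
print(f"expected count {expected:.3f}; expected sample proportion {expected / n:.3f}")
```

The simulated proportions should track the exact probabilities closely, with small discrepancies due to the finite number of simulated samples.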

Questions (o) and (p) ask students to again consider questions of what

inferences can be drawn from such probability calculations. The subsequent practice problems address

another common student misconception, that the size of the population always

affects the behavior of the sampling distribution. In parts (a) and (c) of Practice 3.1.10,

students consider using a sample of size 20 (and 2 nouns) instead of 100

words. We want students to see that with

large populations, the characteristics of the sampling distribution do not

depend on the population size (you will need to encourage them to ignore small

deviations in the empirical values due to the finite number of samples).
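One way to show that the population size barely matters once the population is large relative to the sample is to compute the exact standard deviation of the sample proportion, which includes the finite population correction (N - n)/(N - 1). The sample size of 20 and population proportion of 0.4 below are hypothetical.

```python
import math

def sd_sample_proportion(N, pi, n):
    # Exact SD of the sample proportion for a simple random sample of size n
    # (without replacement) from N items with success proportion pi.
    # The factor (N - n) / (N - 1) is the finite population correction.
    return math.sqrt(pi * (1 - pi) / n * (N - n) / (N - 1))

n, pi = 20, 0.4  # hypothetical sample size and population proportion
sds = {N: sd_sample_proportion(N, pi, n) for N in (100, 1_000, 10_000, 1_000_000)}
for N, sd in sds.items():
    print(f"population size {N:>9,}: SD of sample proportion {sd:.4f}")
print(f"binomial limit (no correction): {math.sqrt(pi * (1 - pi) / n):.4f}")
```

The SD changes noticeably between N = 100 and N = 1,000 but is essentially constant from N = 10,000 on, which is the pattern students should see in the practice problems once they set aside simulation noise.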

In Investigation 3.1.5 students put what they have learned

in the earlier investigations together by using the hypergeometric distribution

to make a decision about an unknown population parameter based on the sample

results and again consider the issue of statistical significance. In class discussion you will need to emphasize

that this is the real application, making a decision based on one individual

sample, but that their new knowledge of the pattern of many samples is what

enables them to make such decisions (with some but not complete certainty). (Remind them that they usually do not have

access to the population and that they usually only have one sample, but that

the detour of the previous investigations was necessary to begin to understand

the pattern of the sampling distribution.)

In this investigation they are also introduced to other study design

issues such as nonsampling errors. The

graphs on p. 199 provide a visual comparison of the theoretical sampling

distribution for different parameter values.

You may want to circle the portion of the distribution to the right of

the arrow, to help students see that the observed sample result is very unusual

for the parameter value on the left (p

= .5) but not for the one on the right (p

= 2/3). The discussion tries to

encourage students to talk in terms of plausible values of the parameter to

avoid sloppy language like “p is probably equal to

2/3.” You may want to remind them of

what they learned about the definition of probability in Chapter 1 and how the

parameter value is not what is changing in this process. The goal is to get students thinking in

terms of “plausible values of the parameter based on the sample results.”
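The reasoning in Investigation 3.1.5 and the graphs on p. 199 can be mimicked by computing tail probabilities under several candidate parameter values. This sketch assumes a hypothetical population of 300 items, a sample of 30, and 21 observed successes; these numbers are not from the text. Calling a parameter value "plausible" when neither tail probability falls below 0.025 is one common convention, used here as an assumption.

```python
import math

def hypergeom_pmf(k, N, M, n):
    # P(X = k) when sampling n of N items without replacement, M of them successes.
    return math.comb(M, k) * math.comb(N - M, n - k) / math.comb(N, n)

def upper_tail(x, N, M, n):
    return sum(hypergeom_pmf(k, N, M, n) for k in range(x, n + 1))

def lower_tail(x, N, M, n):
    return sum(hypergeom_pmf(k, N, M, n) for k in range(0, x + 1))

N, n, observed = 300, 30, 21  # hypothetical population size, sample size, successes

# Compare two candidate parameter values, as in the side-by-side graphs.
for M in (150, 200):
    print(f"population proportion {M / N:.3f}: "
          f"P(X >= {observed}) = {upper_tail(observed, N, M, n):.4f}")

# Scan every possible population count and keep the values not contradicted by the sample.
plausible = [M / N for M in range(N + 1)
             if min(upper_tail(observed, N, M, n), lower_tail(observed, N, M, n)) >= 0.025]
print(f"plausible population proportions: {min(plausible):.3f} to {max(plausible):.3f}")
```

Under these assumptions the observed result is very unusual when the population proportion is 0.5 but not when it is 2/3, and the scan turns that comparison into a range of plausible parameter values.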

As discussed above, Exploration 3.1 asks students to use

Minitab to examine the sampling distributions that result from different sampling methods. This could work well as a paired lab to be

completed outside of class to further develop student familiarity and comfort

with Minitab. One primary goal is for

students to learn that stratification aims to reduce variability in sample

statistics. Students should have

completed the optional Investigation 3.1.2 before working on this exploration.
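The payoff of stratification in Exploration 3.1 can be previewed with a quick simulation. The two strata below (150 "short" and 100 "long" word lengths) are hypothetical and randomly generated, and the sample of 20 is split in proportion to stratum size so that the unweighted sample mean is a fair estimate of the population mean.

```python
import random
import statistics

rng = random.Random(11)
# Hypothetical strata: 150 "short" word lengths and 100 "long" word lengths.
short = [rng.randint(1, 4) for _ in range(150)]
long_words = [rng.randint(6, 12) for _ in range(100)]
population = short + long_words
n = 20

def srs_mean():
    return statistics.mean(rng.sample(population, n))

def stratified_mean():
    # Proportional allocation: 12 of the 20 words from the short stratum (150/250 = 60%).
    n_short = round(n * len(short) / len(population))
    sample = rng.sample(short, n_short) + rng.sample(long_words, n - n_short)
    return statistics.mean(sample)

reps = 5_000
sd_srs = statistics.stdev([srs_mean() for _ in range(reps)])
sd_strat = statistics.stdev([stratified_mean() for _ in range(reps)])
print(f"SD of sample means, simple random sampling: {sd_srs:.3f}")
print(f"SD of sample means, stratified sampling:    {sd_strat:.3f}")
```

Because every stratified sample contains the "right" mix of short and long words, the variability between strata drops out, and the sample means vary much less than under simple random sampling of the same size.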

At this point in the course you could consider a data

collection project where students are asked to take a sample from a larger

population for a binary variable where they have a conjecture as to the

population probability of success. Click here for an example assignment.

Section 3.2: Sampling from a Process

Timing/Materials: In this section we transition from sampling from a finite population to sampling from a process,

which motivates the introduction of the binomial probability distribution to

replace the hypergeometric distribution as the mathematical model in this

setting. Beginning in Investigation

3.2.2, we rely heavily on a Java applet for simulating binomial observations and

use both the applet and Minitab to compute binomial probabilities. This section should take 50-60 minutes,

particularly if you give students some time to practice with Minitab on their own.
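The switch from the hypergeometric to the binomial model can be justified numerically: when the population is large relative to the sample, the two probability distributions nearly coincide. A sketch with a hypothetical sample size of 10 and success proportion of 0.3:

```python
import math

def binom_pmf(k, n, p):
    # n independent trials with constant success probability p.
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def hypergeom_pmf(k, N, M, n):
    # Sampling n items without replacement from N items, M of them successes.
    return math.comb(M, k) * math.comb(N - M, n - k) / math.comb(N, n)

n, p = 10, 0.3  # hypothetical sample size and success proportion
max_gap = {}
for N in (50, 100_000):  # a small and a large hypothetical population
    M = round(p * N)
    max_gap[N] = max(abs(hypergeom_pmf(k, N, M, n) - binom_pmf(k, n, p))
                     for k in range(n + 1))
    print(f"N = {N:>7}: largest gap between hypergeometric and binomial = {max_gap[N]:.5f}")
```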

Investigation 3.2.1 presents a variation on a study that

will be analyzed in more detail later.

You may wish to replace the photographs at petowner.html (for Beth

Chance, the correct choice is in the middle) with your own. The goal here is to introduce a Bernoulli

process and a Bernoulli random variable; introduction of the binomial

distribution waits until the next investigation. Investigation 3.2.2 presents a similar

simulation but in a more artificial context, which is then expanded to develop

the binomial model. Rather than use the

“pop quiz” with multiple-choice answers but no questions, as we do, you

could instead present students with a set of very difficult multiple-choice

questions on which you are quite confident they would have to guess

randomly. You can play up

this example, though, telling students to begin answering the questions

immediately; they will be uncomfortable with the fact that you have not shown

them any questions! You can warn them

that question 4 is particularly tough. The point is for the students to guess

blindly (and independently) on all 5 questions.
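The pop-quiz activity can also be simulated. The sketch assumes four answer choices per question (the notes do not say how many), generates a random answer key for each question as in class, and compares the simulated distribution of scores with the binomial probabilities.

```python
import math
import random

NUM_QUESTIONS = 5
NUM_CHOICES = 4  # assumption: four answer choices per question
p = 1 / NUM_CHOICES  # chance that a blind guess is correct

def take_quiz(rng):
    # Each question is a Bernoulli trial: a random key letter vs. an independent blind guess.
    return sum(rng.randrange(NUM_CHOICES) == rng.randrange(NUM_CHOICES)
               for _ in range(NUM_QUESTIONS))

rng = random.Random(5)
num_students = 10_000
scores = [take_quiz(rng) for _ in range(num_students)]

for k in range(NUM_QUESTIONS + 1):
    binomial = math.comb(NUM_QUESTIONS, k) * p**k * (1 - p) ** (NUM_QUESTIONS - k)
    print(f"{k} correct: simulated {scores.count(k) / num_students:.4f}, binomial {binomial:.4f}")
```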

You can then show them an answer key (we randomly generate a different

answer key each time) to have them (or their neighbor) determine the number of

correct answers. You can also tease the

students who have 0 correct answers that they must not have studied. You may want to lead the students through

most of this investigation. Student

misconceptions to look for include confusing equally likely outcomes in the

sample space with the values of the random variable, not seeing the

distinction between independence and a constant probability of success, and

incorrectly applying the complement rule.
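To address the first misconception, it can help to list the sample space explicitly. Assuming again a 1/4 chance of guessing each of 5 questions correctly, the sketch below enumerates all 32 correct/incorrect sequences and adds up their probabilities for each value of X. The sequences are not equally likely when p is not 1/2, and even when they are, the values of X are not equally likely (there is 1 sequence with X = 0 but 10 with X = 2).

```python
import itertools
import math

n = 5
p = 0.25  # assumption: chance of guessing one question correctly

# All correct (C) / incorrect (I) sequences for 5 questions: 2**5 = 32 of them.
outcomes = list(itertools.product("CI", repeat=n))

prob_of_x = {k: 0.0 for k in range(n + 1)}
sequences_with_x = {k: 0 for k in range(n + 1)}
for seq in outcomes:
    k = seq.count("C")
    sequences_with_x[k] += 1
    # Independence + constant p: multiply the per-question probabilities.
    prob_of_x[k] += p**k * (1 - p) ** (n - k)

for k in range(n + 1):
    formula = math.comb(n, k) * p**k * (1 - p) ** (n - k)
    print(f"X = {k}: {sequences_with_x[k]:>2} sequences, "
          f"P(X = {k}) = {prob_of_x[k]:.4f} (binomial formula {formula:.4f})")
```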

The probability rules are covered very briefly. If you desire more probability coverage in

this course, you may wish to expand on these rules.

In this investigation, we again encourage use of technology

to help students calculate probabilities quickly and to give students a visual

image of the binomial distribution.

Questions (x) and (y) help students focus on the interpretation of the

probability and how to use it to make decisions (is this a surprising result?),

so this is a good time to slow down and make sure the students are

comfortable. These questions also ask

students to consider and develop intuition for the effects of sample size. As

the hint in question (x) suggests, they will again need to be reminded of the correct

way to apply the complement rule. This

comes up very often and causes confusion for many students. Question (aa) is also a difficult one for

students but worth spending time on. The

subsequent practice problems provide more practice in identifying the

applicability of the binomial distribution and the behavior of the binomial

distribution for different parameter values.
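The complement rule error usually involves the boundary value. In the sketch below (a guessing probability of 1/4 is assumed), P(X ≥ x) is computed as 1 - P(X ≤ x - 1); the "wrong" version subtracts P(X ≤ x) instead and so silently drops the probability of X = x. The last line illustrates the sample size effect: getting 60% correct by guessing is much less likely on 20 questions than on 5.

```python
import math

def binom_pmf(k, n, p):
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def prob_at_least(x, n, p):
    # Correct complement rule: P(X >= x) = 1 - P(X <= x - 1).
    return 1 - sum(binom_pmf(k, n, p) for k in range(x))

def wrong_prob_at_least(x, n, p):
    # Common error: 1 - P(X <= x) actually gives P(X >= x + 1).
    return 1 - sum(binom_pmf(k, n, p) for k in range(x + 1))

p = 0.25  # assumption: blind guessing with four choices
print(f"P(at least 1 of 5 correct)   = {prob_at_least(1, 5, p):.4f}")
print(f"P(at least 3 of 5 correct)   = {prob_at_least(3, 5, p):.4f}")
print(f"  wrong complement gives       {wrong_prob_at_least(3, 5, p):.4f}")
print(f"P(at least 12 of 20 correct) = {prob_at_least(12, 20, p):.4f}")
```

For 5 questions, the correct and incorrect versions give about 0.10 and 0.016 for "at least 3 correct," a difference large enough to change a student's conclusion about whether the result is surprising.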

Be explicit with students that you use “parameter” to refer both to a

numerical characteristic of a population and to a numerical characteristic of a probability model.

Section 3.3: Exact Binomial Inference

Timing/Materials: This section will probably take about 3

hours, depending on how much exploring you want students to do vs. leading them

through. Many students will struggle more

than usual with all of the significance testing concepts and terminology

introduced here, and they also need time to become comfortable with binomial

calculations. Ideally students will have

access to technology to carry out some of the binomial probability calculations

(e.g., instructor demo, applet, or Minitab).

Access to the applet is specifically assumed in Investigations 3.3.4 and

3.3.5 (for the accompanying visual images).

Students are also introduced to the exact binomial p-value and confidence interval calculations in Minitab. Investigation 3.3.7 uses an applet to focus

on the concepts of type I and type II errors.

Much of this investigation can be skipped, though the definitions of type

I and type II errors are assumed later.
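As a rough companion to the Minitab and applet work, the sketch below computes an exact one-sided binomial p-value and the type I and type II error probabilities of the resulting rejection rule. The settings (sample size 20, null value 0.5, significance level 0.05, alternative values 0.6 and 0.75, observed count 14) are hypothetical. Because the binomial distribution is discrete, the actual type I error rate falls below the nominal 0.05.

```python
import math

def binom_pmf(k, n, p):
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def upper_p_value(x, n, p0):
    # Exact one-sided p-value for H0: p = p0 vs. Ha: p > p0, i.e. P(X >= x | p0).
    return sum(binom_pmf(k, n, p0) for k in range(x, n + 1))

def rejection_cutoff(n, p0, alpha):
    # Smallest count c with P(X >= c | p0) <= alpha; we reject H0 when X >= c.
    for c in range(n + 2):
        if upper_p_value(c, n, p0) <= alpha:
            return c

n, p0, alpha = 20, 0.5, 0.05  # hypothetical study settings
print(f"p-value for 14 successes: {upper_p_value(14, n, p0):.4f}")

c = rejection_cutoff(n, p0, alpha)
type_i = upper_p_value(c, n, p0)  # actual P(reject H0 | H0 true)
print(f"reject when X >= {c}; P(type I error) = {type_i:.4f}")
for p_true in (0.6, 0.75):
    power = upper_p_value(c, n, p_true)  # P(reject H0 | p = p_true)
    print(f"if p = {p_true}: power = {power:.4f}, P(type II error) = {1 - power:.4f}")
```

The type II error probability depends on which alternative value is true: it shrinks as the true probability moves farther from the null value.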

Investigation 3.3.1 has students apply the binomial model to

calculate p-values. We again have students work with questions